Proxmox VE Cloud Desktop in Practice ① - Configuring NVIDIA vGPU

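Preface

With the build-out of internet infrastructure and the growth of cloud computing, concepts such as "cloud desktop" and "cloud gaming" have gradually entered public view, changing how we think about hardware performance and the gaming experience. This series shows how to use Proxmox VE to build a simple cloud desktop system that supports Windows and Linux.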

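Understanding vGPU

There are generally three ways to give a VM graphics capability:

- Software emulation (e.g. "Standard VGA", "VMware SVGA II")

- PCI Passthrough

- vGPU (mdev / SR-IOV)

In terms of performance: PCI Passthrough > vGPU > software emulation.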

There are three vGPU solutions on the market:

| Intel | AMD | NVIDIA |
|---|---|---|
| GVT-g | MxGPU | NVIDIA vGPU |

I use an NVIDIA Tesla P40, so this article covers NVIDIA vGPU only.

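vGPU architecture

As the figure above shows, NVIDIA vGPU is implemented by hardware and software working together: the GPU on the hardware side and NVIDIA vGPU Manager on the software side.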

GPUs supported by vGPU

In general, vGPU is supported only on data-center Tesla GPUs and some Quadro GPUs, roughly as follows:

| Architecture | Models |
|---|---|
| Maxwell | M6, M10, M60 |
| Pascal | P4, P6, P40, P100, P100 12GB |
| Volta | V100 |
| Turing | T4, RTX 6000, RTX 6000 passive, RTX 8000, RTX 8000 passive |
| Ampere | A2, A10, A16, A40, RTX A5000, RTX A5500, RTX A6000 |
| Ada | L4, L40, RTX 6000 Ada |

However, community developers have written an unlock patch that enables vGPU on 9-, 10-, and 20-series cards. The project and a tutorial are linked below:

{% link https://github.com/mbilker/vgpu_unlock-rs %}

{% link https://gitlab.com/polloloco/vgpu-proxmox %}

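Supported platforms

According to the official documentation, the following platforms are currently supported:

- Citrix XenServer

- Linux with KVM (for example Proxmox VE)

- Microsoft Azure Stack HCI

- Microsoft Windows Server (that is, Hyper-V)

- Nutanix AHV

- VMware vSphere ESXi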

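vGPU types

NVIDIA describes them as follows:

- vCS: NVIDIA Virtual Compute Server, which accelerates virtualized AI compute workloads on KVM-based infrastructure (e.g. GRID P40-1C).

- vWS: NVIDIA RTX Virtual Workstation, for creative and technical professionals who use graphics applications (e.g. GRID P40-1Q).

- vPC: NVIDIA Virtual PC, for virtual desktops (VDI) used by knowledge workers running office productivity and multimedia applications (e.g. GRID P40-1B).

- vApp: NVIDIA Virtual Applications, for application streaming with Remote Desktop Session Host (RDSH) solutions (e.g. GRID P40-1A).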
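The profile names in this list follow a pattern: board, framebuffer size in GB, then a letter for the license class. As a rough illustration, the hypothetical helper below (`describe_profile` is not part of any NVIDIA tool) decodes a name using only the letter mapping given above.

```shell
# Decode a vGPU profile name such as "GRID P40-1Q" into board, framebuffer
# size and license class. The letter-to-class mapping is taken from the list
# above; the function name is made up for this sketch.
describe_profile() {
    name=${1#GRID }          # drop the leading "GRID " if present
    board=${name%%-*}        # text before the dash, e.g. "P40"
    spec=${name#*-}          # text after the dash, e.g. "1Q" or "2B4"
    size=$(printf '%s' "$spec" | sed 's/^\([0-9]*\).*/\1/')
    letter=$(printf '%s' "$spec" | sed 's/^[0-9]*\([A-Z]\).*/\1/')
    case $letter in
        C) class=vCS ;;
        Q) class=vWS ;;
        B) class=vPC ;;
        A) class=vApp ;;
        *) class=unknown ;;
    esac
    printf '%s %sGB %s\n' "$board" "$size" "$class"
}
```

For example, `describe_profile "GRID P40-1Q"` prints `P40 1GB vWS`.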

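Preparing the vGPU driver

{% note color:warning Note This article uses Proxmox VE 8.0.3 with kernel 6.2.16-4-pve %}

Removing the enterprise repository

Proxmox VE uses the enterprise repository by default, which cannot be accessed without a subscription key.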

echo "deb https://mirrors.tuna.tsinghua.edu.cn/proxmox/debian bookworm pve-no-subscription" >> /etc/apt/sources.list.d/pve-no-subscription.list

rm /etc/apt/sources.list.d/pve-enterprise.list

Updating the system

apt update

apt dist-upgrade

reboot

This makes sure the system is running the latest kernel.
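After the reboot it can be worth confirming which kernel is actually running, since the driver used later in this article targets the 6.2 kernel. The sketch below is one possible check; `kernel_at_least` is a hypothetical helper, and it relies on the `-V` (version sort) option of GNU `sort`.

```shell
# Succeed if kernel release $1 (e.g. "6.2.16-4-pve") is at least version $2.
kernel_at_least() {
    have=${1%%-*}     # strip the "-4-pve" style suffix: 6.2.16-4-pve -> 6.2.16
    want=$2
    # With version sort, the smaller of the two versions comes out first.
    lowest=$(printf '%s\n%s\n' "$have" "$want" | sort -V | head -n 1)
    [ "$lowest" = "$want" ]
}
```

A typical call would be `kernel_at_least "$(uname -r)" 6.2 && echo "kernel is new enough"`.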

Installing the required tools

apt install -y git build-essential dkms pve-headers mdevctl

Here dkms makes sure the driver module is rebuilt automatically after each kernel update.
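To double-check that the installation succeeded, something like the following could be used. `missing_tools` is a hypothetical helper that prints every command it cannot find on `PATH`; the assumption here is that `gcc` and `make` are what build-essential provides.

```shell
# Print each command name from the arguments that is not found on PATH.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}
```

Running `missing_tools git gcc make dkms mdevctl` should print nothing if everything is in place.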

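Enabling IOMMU

Regular systems

Edit /etc/default/grub, find GRUB_CMDLINE_LINUX_DEFAULT="quiet", and append the following inside the quotes, after quiet:

Intel

intel_iommu=on iommu=pt

AMD

amd_iommu=on iommu=pt

The result should look like this (Intel):

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt"

Systems on ZFS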

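Edit /etc/kernel/cmdline, find root=ZFS=rpool/ROOT/pve-1 boot=zfs, and append the following after it:

Intel

intel_iommu=on iommu=pt

AMD

amd_iommu=on iommu=pt

The result should look like this (Intel):

root=ZFS=rpool/ROOT/pve-1 boot=zfs intel_iommu=on iommu=pt

Now update the boot configuration (on a GRUB system that is not managed by proxmox-boot-tool, run update-grub instead):

proxmox-boot-tool refresh

Disabling the nouveau driver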

nouveau is an open-source NVIDIA graphics driver. It conflicts with the vGPU driver and must be disabled.

echo "blacklist nouveau" >> /etc/modprobe.d/blacklist-nouveau.conf

echo "options nouveau modeset=0" >> /etc/modprobe.d/blacklist-nouveau.conf

Loading the vfio modules

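Edit /etc/modules and add the following (on kernel 6.2 and later, vfio_virqfd has been merged into the vfio module, so that line can be left out):

vfio

vfio_iommu_type1

vfio_pci

vfio_virqfd

Updating the initramfs

update-initramfs -u -k all

Rebooting

reboot

Checking that IOMMU is enabled

After the reboot, run:

dmesg | grep -e DMAR -e IOMMU

On my dual-socket C602 server it prints the following: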

{% folding open:false %}

root@pve-r720:~# dmesg | grep -e DMAR -e IOMMU

[ 0.000000] Warning: PCIe ACS overrides enabled; This may allow non-IOMMU protected peer-to-peer DMA

[ 0.012034] ACPI: DMAR 0x000000007D3346F4 000158 (v01 DELL PE_SC3 00000001 DELL 00000001)

[ 0.012084] ACPI: Reserving DMAR table memory at [mem 0x7d3346f4-0x7d33484b]

[ 0.591102] DMAR: IOMMU enabled

[ 1.347160] DMAR: Host address width 46

[ 1.347161] DMAR: DRHD base: 0x000000d1100000 flags: 0x0

[ 1.347168] DMAR: dmar0: reg_base_addr d1100000 ver 1:0 cap d2078c106f0466 ecap f020de

[ 1.347170] DMAR: DRHD base: 0x000000dd900000 flags: 0x1

[ 1.347175] DMAR: dmar1: reg_base_addr dd900000 ver 1:0 cap d2078c106f0466 ecap f020de

[ 1.347177] DMAR: RMRR base: 0x0000007f458000 end: 0x0000007f46ffff

[ 1.347178] DMAR: RMRR base: 0x0000007f450000 end: 0x0000007f450fff

[ 1.347180] DMAR: RMRR base: 0x0000007f452000 end: 0x0000007f452fff

[ 1.347181] DMAR: ATSR flags: 0x0

[ 1.347183] DMAR-IR: IOAPIC id 2 under DRHD base 0xd1100000 IOMMU 0

[ 1.347185] DMAR-IR: IOAPIC id 0 under DRHD base 0xdd900000 IOMMU 1

[ 1.347186] DMAR-IR: IOAPIC id 1 under DRHD base 0xdd900000 IOMMU 1

[ 1.347187] DMAR-IR: HPET id 0 under DRHD base 0xdd900000

[ 1.347188] DMAR-IR: x2apic is disabled because BIOS sets x2apic opt out bit.

[ 1.347189] DMAR-IR: Use 'intremap=no_x2apic_optout' to override the BIOS setting.

[ 1.347933] DMAR-IR: Enabled IRQ remapping in xapic mode

[ 2.304979] DMAR: No SATC found

[ 2.304981] DMAR: dmar0: Using Queued invalidation

[ 2.304990] DMAR: dmar1: Using Queued invalidation

[ 2.310738] DMAR: Intel(R) Virtualization Technology for Directed I/O

{% endfolding %}

The key line is DMAR: IOMMU enabled, which shows that IOMMU is active.
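That check can also be scripted. The sketch below reads kernel log text on standard input and looks only for the Intel message quoted above; AMD systems print different messages, which this hypothetical `iommu_enabled` helper does not cover.

```shell
# Read kernel log text on stdin; succeed if it contains the Intel
# "DMAR: IOMMU enabled" line shown in the output above.
iommu_enabled() {
    grep -q 'DMAR: IOMMU enabled'
}
```

On the host it would be used as `dmesg | iommu_enabled && echo "IOMMU is on"`.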

Installing the vGPU driver

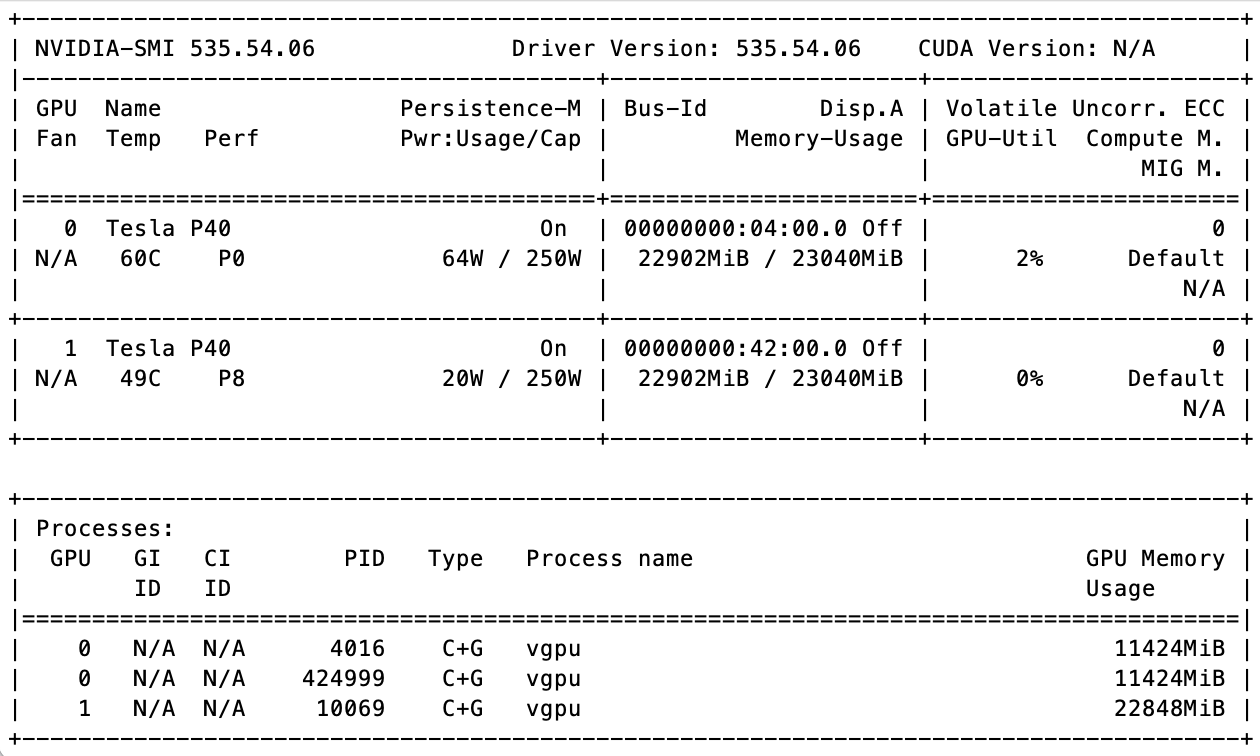

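{% note color:warning Note This section uses driver version 535.54.06, GRID release 16.0. %}

As a KVM platform, Proxmox VE needs the KVM build of the vGPU driver.

At present only the 16.0 vGPU driver supports the new 6.2 kernel; releases such as 15.3 and 15.1 support PVE 7 at most.

Unfortunately, NVIDIA does not let you download the precious GRID driver freely (fuck-you-nvidia.jpg); you need to register for a free vGPU license in order to download it.

{% note color:warning Note When applying for the free license, do not use a free mailbox such as @gmail.com or @qq.com, or you may face manual review; I suggest using an address on your own domain. %}

{% note color:green Tip Registration too much hassle? You can also download the driver from the 佛西 blog %}

Once downloaded, unzip the archive, find NVIDIA-Linux-x86_64-535.54.06-vgpu-kvm.run in the Host_Drivers folder, and upload it to Proxmox VE.

Run the following on Proxmox VE: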

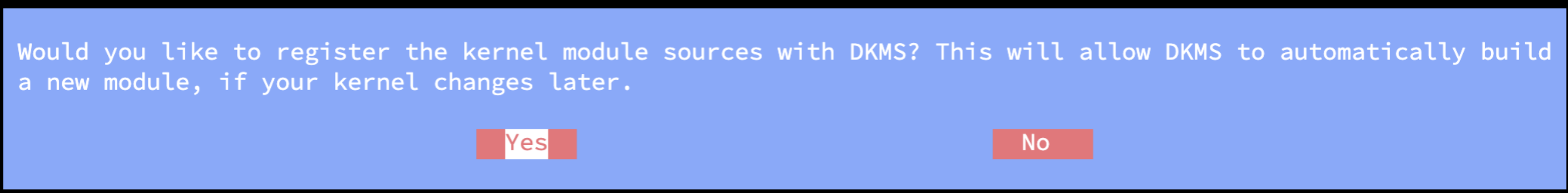

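chmod +x NVIDIA-Linux-x86_64-535.54.06-vgpu-kvm.run

./NVIDIA-Linux-x86_64-535.54.06-vgpu-kvm.run --dkms

When the installer asks whether to register the DKMS module, be sure to choose Yes, so that the driver is rebuilt for the new kernel after a kernel upgrade.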

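{% note color:warning Note Upgrading Proxmox VE through apt does not upgrade pve-headers, so be sure to install the new pve-headers before upgrading the system to avoid DKMS build failures. %}

After rebooting, run nvidia-smi. On my machine with two Tesla P40s it prints the following (ignore the "vgpu" processes):

Run mdevctl types to list the mdev types: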
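One way to spot a kernel that DKMS cannot build for is to look for a missing `build` entry under `/lib/modules/<version>`. This assumes the headers package is what creates that entry, as Debian-style header packages usually do. `kernels_without_headers` is a hypothetical helper, not a Proxmox tool.

```shell
# List kernel versions under a modules directory (default /lib/modules) that
# have no "build" entry, i.e. probably no headers for DKMS to compile against.
kernels_without_headers() {
    root=${1:-/lib/modules}
    for dir in "$root"/*/; do
        [ -d "$dir" ] || continue              # no kernel directories at all
        [ -e "${dir}build" ] || basename "$dir"
    done
}
```

If `kernels_without_headers` prints a version, installing the matching headers package before rebooting into that kernel should avoid the failed build.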

{% folding open:false %}

root@pve-r720:~# mdevctl types

0000:04:00.0

nvidia-156

Available instances: 0

Device API: vfio-pci

Name: GRID P40-2B

Description: num_heads=4, frl_config=45, framebuffer=2048M, max_resolution=5120x2880, max_instance=12

nvidia-215

Available instances: 0

Device API: vfio-pci

Name: GRID P40-2B4

Description: num_heads=4, frl_config=45, framebuffer=2048M, max_resolution=5120x2880, max_instance=12

nvidia-241

Available instances: 0

Device API: vfio-pci

Name: GRID P40-1B4

Description: num_heads=4, frl_config=45, framebuffer=1024M, max_resolution=5120x2880, max_instance=24

nvidia-46

Available instances: 0

Device API: vfio-pci

Name: GRID P40-1Q

Description: num_heads=4, frl_config=60, framebuffer=1024M, max_resolution=5120x2880, max_instance=24

nvidia-47

Available instances: 0

Device API: vfio-pci

Name: GRID P40-2Q

Description: num_heads=4, frl_config=60, framebuffer=2048M, max_resolution=7680x4320, max_instance=12

nvidia-48

Available instances: 0

Device API: vfio-pci

Name: GRID P40-3Q

Description: num_heads=4, frl_config=60, framebuffer=3072M, max_resolution=7680x4320, max_instance=8

nvidia-49

Available instances: 0

Device API: vfio-pci

Name: GRID P40-4Q

Description: num_heads=4, frl_config=60, framebuffer=4096M, max_resolution=7680x4320, max_instance=6

nvidia-50

Available instances: 0

Device API: vfio-pci

Name: GRID P40-6Q

Description: num_heads=4, frl_config=60, framebuffer=6144M, max_resolution=7680x4320, max_instance=4

nvidia-51

Available instances: 0

Device API: vfio-pci

Name: GRID P40-8Q

Description: num_heads=4, frl_config=60, framebuffer=8192M, max_resolution=7680x4320, max_instance=3

nvidia-52

Available instances: 0

Device API: vfio-pci

Name: GRID P40-12Q

Description: num_heads=4, frl_config=60, framebuffer=12288M, max_resolution=7680x4320, max_instance=2

nvidia-53

Available instances: 0

Device API: vfio-pci

Name: GRID P40-24Q

Description: num_heads=4, frl_config=60, framebuffer=24576M, max_resolution=7680x4320, max_instance=1

nvidia-54

Available instances: 0

Device API: vfio-pci

Name: GRID P40-1A

Description: num_heads=1, frl_config=60, framebuffer=1024M, max_resolution=1280x1024, max_instance=24

nvidia-55

Available instances: 0

Device API: vfio-pci

Name: GRID P40-2A

Description: num_heads=1, frl_config=60, framebuffer=2048M, max_resolution=1280x1024, max_instance=12

nvidia-56

Available instances: 0

Device API: vfio-pci

Name: GRID P40-3A

Description: num_heads=1, frl_config=60, framebuffer=3072M, max_resolution=1280x1024, max_instance=8

nvidia-57

Available instances: 0

Device API: vfio-pci

Name: GRID P40-4A

Description: num_heads=1, frl_config=60, framebuffer=4096M, max_resolution=1280x1024, max_instance=6

nvidia-58

Available instances: 0

Device API: vfio-pci

Name: GRID P40-6A

Description: num_heads=1, frl_config=60, framebuffer=6144M, max_resolution=1280x1024, max_instance=4

nvidia-59

Available instances: 0

Device API: vfio-pci

Name: GRID P40-8A

Description: num_heads=1, frl_config=60, framebuffer=8192M, max_resolution=1280x1024, max_instance=3

nvidia-60

Available instances: 0

Device API: vfio-pci

Name: GRID P40-12A

Description: num_heads=1, frl_config=60, framebuffer=12288M, max_resolution=1280x1024, max_instance=2

nvidia-61

Available instances: 0

Device API: vfio-pci

Name: GRID P40-24A

Description: num_heads=1, frl_config=60, framebuffer=24576M, max_resolution=1280x1024, max_instance=1

nvidia-62

Available instances: 0

Device API: vfio-pci

Name: GRID P40-1B

Description: num_heads=4, frl_config=45, framebuffer=1024M, max_resolution=5120x2880, max_instance=24

0000:42:00.0

nvidia-156

Available instances: 0

Device API: vfio-pci

Name: GRID P40-2B

Description: num_heads=4, frl_config=45, framebuffer=2048M, max_resolution=5120x2880, max_instance=12

nvidia-215

Available instances: 0

Device API: vfio-pci

Name: GRID P40-2B4

Description: num_heads=4, frl_config=45, framebuffer=2048M, max_resolution=5120x2880, max_instance=12

nvidia-241

Available instances: 0

Device API: vfio-pci

Name: GRID P40-1B4

Description: num_heads=4, frl_config=45, framebuffer=1024M, max_resolution=5120x2880, max_instance=24

nvidia-46

Available instances: 0

Device API: vfio-pci

Name: GRID P40-1Q

Description: num_heads=4, frl_config=60, framebuffer=1024M, max_resolution=5120x2880, max_instance=24

nvidia-47

Available instances: 0

Device API: vfio-pci

Name: GRID P40-2Q

Description: num_heads=4, frl_config=60, framebuffer=2048M, max_resolution=7680x4320, max_instance=12

nvidia-48

Available instances: 0

Device API: vfio-pci

Name: GRID P40-3Q

Description: num_heads=4, frl_config=60, framebuffer=3072M, max_resolution=7680x4320, max_instance=8

nvidia-49

Available instances: 0

Device API: vfio-pci

Name: GRID P40-4Q

Description: num_heads=4, frl_config=60, framebuffer=4096M, max_resolution=7680x4320, max_instance=6

nvidia-50

Available instances: 0

Device API: vfio-pci

Name: GRID P40-6Q

Description: num_heads=4, frl_config=60, framebuffer=6144M, max_resolution=7680x4320, max_instance=4

nvidia-51

Available instances: 0

Device API: vfio-pci

Name: GRID P40-8Q

Description: num_heads=4, frl_config=60, framebuffer=8192M, max_resolution=7680x4320, max_instance=3

nvidia-52

Available instances: 0

Device API: vfio-pci

Name: GRID P40-12Q

Description: num_heads=4, frl_config=60, framebuffer=12288M, max_resolution=7680x4320, max_instance=2

nvidia-53

Available instances: 0

Device API: vfio-pci

Name: GRID P40-24Q

Description: num_heads=4, frl_config=60, framebuffer=24576M, max_resolution=7680x4320, max_instance=1

nvidia-54

Available instances: 0

Device API: vfio-pci

Name: GRID P40-1A

Description: num_heads=1, frl_config=60, framebuffer=1024M, max_resolution=1280x1024, max_instance=24

nvidia-55

Available instances: 0

Device API: vfio-pci

Name: GRID P40-2A

Description: num_heads=1, frl_config=60, framebuffer=2048M, max_resolution=1280x1024, max_instance=12

nvidia-56

Available instances: 0

Device API: vfio-pci

Name: GRID P40-3A

Description: num_heads=1, frl_config=60, framebuffer=3072M, max_resolution=1280x1024, max_instance=8

nvidia-57

Available instances: 0

Device API: vfio-pci

Name: GRID P40-4A

Description: num_heads=1, frl_config=60, framebuffer=4096M, max_resolution=1280x1024, max_instance=6

nvidia-58

Available instances: 0

Device API: vfio-pci

Name: GRID P40-6A

Description: num_heads=1, frl_config=60, framebuffer=6144M, max_resolution=1280x1024, max_instance=4

nvidia-59

Available instances: 0

Device API: vfio-pci

Name: GRID P40-8A

Description: num_heads=1, frl_config=60, framebuffer=8192M, max_resolution=1280x1024, max_instance=3

nvidia-60

Available instances: 0

Device API: vfio-pci

Name: GRID P40-12A

Description: num_heads=1, frl_config=60, framebuffer=12288M, max_resolution=1280x1024, max_instance=2

nvidia-61

Available instances: 0

Device API: vfio-pci

Name: GRID P40-24A

Description: num_heads=1, frl_config=60, framebuffer=24576M, max_resolution=1280x1024, max_instance=1

nvidia-62

Available instances: 0

Device API: vfio-pci

Name: GRID P40-1B

Description: num_heads=4, frl_config=45, framebuffer=1024M, max_resolution=5120x2880, max_instance=24

{% endfolding %}
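The listing above is long, but each profile boils down to a type ID, a name, a per-instance framebuffer, and an instance limit. On the P40 the last two always multiply out to the card's 24576M. The awk sketch below condenses `mdevctl types` output into one line per profile; `summarise_profiles` is a hypothetical name, and the parsing assumes the field layout shown above.

```shell
# Summarise `mdevctl types` output read from stdin: one line per profile with
# its type id, name, per-instance framebuffer, instance limit and their product.
summarise_profiles() {
    awk '
        /^[ \t]*nvidia-[0-9]+[ \t]*$/ { id = $1 }
        /Name:/        { sub(/^.*Name:[ \t]*/, ""); name = $0 }
        /Description:/ {
            fb = ""; n = ""
            # "framebuffer=" is 12 characters, "max_instance=" is 13.
            if (match($0, /framebuffer=[0-9]+/))  fb = substr($0, RSTART + 12, RLENGTH - 12)
            if (match($0, /max_instance=[0-9]+/)) n  = substr($0, RSTART + 13, RLENGTH - 13)
            printf "%s\t%s\t%sM x %s = %dM\n", id, name, fb, n, fb * n
        }
    '
}
```

With two identical GPUs every profile appears twice, so `mdevctl types | summarise_profiles | sort -u` gives a compact table.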

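Setting up the vGPU license server

As everyone knows, vGPU licensing is extremely expensive and hard to afford unless you are wealthy. For a long time many hobbyists spoofed the PCI ID to pose as a Quadro card and get past NVIDIA's checks. There is now a mature open-source vGPU license server, fastapi-dls, that can be used directly, and the project also provides a Docker image, which saves a lot of work.

Installing the container

{% note color:green Tip Deploy this Docker image in a VM or LXC container; installing Docker directly on PVE may cause problems such as VMs losing network connectivity %}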

# Pull the image

docker pull collinwebdesigns/fastapi-dls:latest

# Create the directory

mkdir -p /opt/fastapi-dls/cert

cd /opt/fastapi-dls/cert

# Generate the private/public key pair

openssl genrsa -out /opt/fastapi-dls/cert/instance.private.pem 2048

openssl rsa -in /opt/fastapi-dls/cert/instance.private.pem -outform PEM -pubout -out /opt/fastapi-dls/cert/instance.public.pem

# Generate the SSL certificate

openssl req -x509 -nodes -days 3650 -newkey rsa:2048 -keyout /opt/fastapi-dls/cert/webserver.key -out /opt/fastapi-dls/cert/webserver.crt

# Create the container; 1825 is the lease length in days, and <YOUR_IP> is the IP of the VM / LXC

docker volume create dls-db

docker run -d --restart=always -e LEASE_EXPIRE_DAYS=1825 -e DLS_URL=<YOUR_IP> -e DLS_PORT=443 -p 443:443 -v /opt/fastapi-dls/cert:/app/cert -v dls-db:/app/database collinwebdesigns/fastapi-dls:latest

With that, vGPU configuration is complete!
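One caveat about the `docker run` line: if the `<YOUR_IP>` placeholder is pasted unchanged, the shell treats the unquoted `<` and `>` as redirections. As a small safeguard, the hypothetical helper below builds the same command from an argument and refuses obvious placeholders. It only prints the command, so it can be reviewed before running; `192.0.2.10` in the usage line is an example address.

```shell
# Print the fastapi-dls `docker run` command with the address filled in.
# Rejects an empty address or one still containing "<", ">" or spaces.
dls_run_command() {
    ip=$1
    days=${2:-1825}     # lease length in days, same default as above
    case $ip in
        ''|*'<'*|*'>'*|*' '*)
            echo "usage: dls_run_command ADDRESS [LEASE_DAYS]" >&2
            return 1 ;;
    esac
    printf '%s\n' "docker run -d --restart=always -e LEASE_EXPIRE_DAYS=$days -e DLS_URL=$ip -e DLS_PORT=443 -p 443:443 -v /opt/fastapi-dls/cert:/app/cert -v dls-db:/app/database collinwebdesigns/fastapi-dls:latest"
}
```

For instance, `dls_run_command 192.0.2.10` prints the full command with `DLS_URL=192.0.2.10`, ready to copy into the VM or LXC shell.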

References

NVIDIA Virtual GPU Software Documentation

佛西 blog - Enabling vGPU_unlock on Proxmox VE 7.2 for GPU virtualization (buduanwang.vip)